On Computer Speed and Culture

First Principles

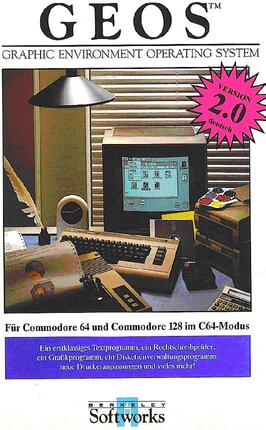

Once upon a time, there was the Commodore 64. It had, fittingly, 64 KiB of memory... which, for a personal computer, was pretty nice at the time. You did have a ton of games to choose from; there were even entire office suites written for it.

Nevertheless, 64 kilobytes has its limits. So much so that most games were written by very small teams, even single developers; there was just no room to fit additional content in. For comparison, 64 kilobytes is about 40 typewritten pages; this is an amount that one programmer can quickly fill working just by themselves.
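The "about 40 pages" figure can be sanity-checked with a quick back-of-the-envelope sketch. The page layout below (65 characters per line, 25 lines per page, one byte per character) is an assumption chosen for illustration, not something the comparison above specifies; other typewriter layouts would shift the result somewhat.

```python
# Assumed typewriter page layout (illustrative figures, not from the essay).
CHARS_PER_LINE = 65
LINES_PER_PAGE = 25


def pages_for(num_bytes, chars_per_line=CHARS_PER_LINE, lines_per_page=LINES_PER_PAGE):
    """Estimate typewritten pages needed for num_bytes, at one byte per character."""
    chars_per_page = chars_per_line * lines_per_page
    return num_bytes / chars_per_page


c64_memory = 64 * 1024  # 64 KiB in bytes
print(f"{pages_for(c64_memory):.1f} pages")  # prints "40.3 pages"
```

With the same assumed layout, a full megabyte comes out to several hundred pages, which is a rough way to see why one person's code stops filling memory once you get past the 64 KiB era.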

The number of lines of code that you could fit into memory did stop being a constraint pretty quickly, though. Filling up an entire megabyte with only code you have written is pretty hard; you can easily have hundreds of people writing code that then fits on a dozen floppy disks.

In contrast, consider neural networks. Today's state-of-the-art large language models require tens of gigabytes of video RAM at the very least. If you go much lower, you get something that's just objectively more stupid. All of the recent developments are happening now and not 20 years ago because now we have the hardware to train and run them on; this makes them somewhat similar to text editors on the Commodore.

Not first principles

One of the commonly known facts about computers is that they are getting faster and faster with each generation. We can now execute more instructions per second, transfer more data, and also store more of it.

For each kind of task to be solved by computers, there is a point in time when it becomes tractable, due to the computing technology of the time reaching the minimum level required for it. Naively, you would assume that once this has been achieved, we will get better and better at solving it.

And yet, anyone who has encountered a contemporary news website and has measured its loading times, especially on something that is not the latest MacBook Pro, can see that loading it takes longer than downloading an entire book over the network would. Other sites take more time between a key press and anything showing up than it would take to send that one character to the literal other side of the planet and get a response.
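To put rough numbers on those two comparisons, here is a minimal sketch. Every constant in it is an assumption picked for illustration: a plain-text book of about 1 MB, a 10 Mbit/s connection, signals travelling through fiber at roughly two thirds of the speed of light, and a straight path covering half of the Earth's circumference. Real routes are longer and add switching delays, so treat the results as lower bounds.

```python
# All figures below are illustrative assumptions, not measurements.
HALF_EARTH_CIRCUMFERENCE_KM = 20_000  # roughly half of ~40,000 km
LIGHT_IN_FIBER_KM_PER_S = 200_000     # roughly two thirds of c
BOOK_SIZE_BYTES = 1_000_000           # assumed plain-text book size
LINK_BITS_PER_S = 10_000_000          # assumed 10 Mbit/s connection


def round_trip_ms(distance_km, speed_km_per_s=LIGHT_IN_FIBER_KM_PER_S):
    """Propagation delay there and back, in milliseconds."""
    return 2 * distance_km / speed_km_per_s * 1000


def download_seconds(size_bytes, link_bits_per_s=LINK_BITS_PER_S):
    """Transfer time for size_bytes, ignoring latency and protocol overhead."""
    return size_bytes * 8 / link_bits_per_s


print(f"round trip to the far side of the planet: {round_trip_ms(HALF_EARTH_CIRCUMFERENCE_KM):.0f} ms")
print(f"downloading the book: {download_seconds(BOOK_SIZE_BYTES):.1f} s")
```

Under these assumptions, both come out well under a second, which is the baseline the slow pages above are being compared against.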

Most people are okay with this. Most of those who are not are in no position to fix it.

Before we conclude that this is an issue with web programmers who do not care about such matters, take C++ programmers instead. They are the ones who definitely care about each CPU instruction and each byte of memory... and yet, compiling something written in C++ always takes a little bit too much time. (... depending on the situation, this sometimes escalates into "a completely unacceptable amount of time"; at that point, though, there is no quick fix to the problem.)

Expectations

As it happens, computer speed typically stops being a major concern once it is fast enough; we stop paying attention until it becomes terrible. What counts as "terrible" is defined by the target audience or the developers.

In the long term, this doesn't have a lot to do with the actual, first-principles computing power, though. Rather, there is a slow accumulation of complexity and various issues, weakly pushing latencies upwards, against a perception that is very much human scale. Compilation times, for example, are rarely optimized down to milliseconds, nor do they end up taking multiple days, despite hardware having gone through a comparable amount of speedup over the past decades; it's always "noticeable but not too slow". Once it's "kind of OK", we stop pushing back; the slow accumulation continues.

The location of this limit is a matter of personal or cultural preferences, though. Pro gamers appreciate CRT screens due to their low latency; accordingly, game developers are not OK with hundreds of milliseconds between click and effect. Lisp programmers are picky about having to restart anything or wait for compilation; as a consequence, most Lisp code just happens to have been written in a way where you don't have to.

Meanwhile, it's perfectly possible to write performant web pages that load extremely quickly. Which was... not always a given; Google was winning the search engine race in the early 2000s partially because it produced results rapidly, with very little cruft added.

It's just that with modern tech, you can get the same loading times that we have already gotten used to and expect, while not actually optimizing for anything.

So we don't.